"We now have a new dimension to the attribution problem. It’s increasingly unclear whether we will always be able to distinguish between the contributions of human scientists and those of their artificial collaborators – the AI tools that are already helping push forward the boundaries of our knowledge."

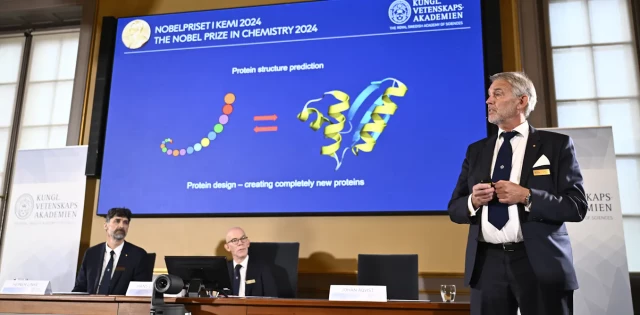

It is interesting that both the Physics and Chemistry Nobel prizes for 2024 involved machine learning (aka AI).

#machinelearning #nobelprize #physics #chemistry

AI was central to two of 2024’s Nobel prize categories. It’s a sign of things to come

AI will feature in future Nobel prizes as scientists exploit the power of this technology for research.

The Conversation

FediThing 🏳️🌈

in reply to David Meyer

"the AI tools that are already helping push forward the boundaries of our knowledge."

If they're talking about LLMs, they're not tools and they're not pushing anything forward, they're just planet-destroying plagiarism machines without any ideas of their own. The professor who wrote this article must surely realise this?

David Meyer

in reply to FediThing 🏳️🌈

With respect to the physics prize, it was given for Hinton and Hopfield's (independent) fundamental work on neural networks. It's hard to say this has nothing to do with LLMs because they (LLMs) couldn't be trained without back-prop (popularized by Hinton et al. [3]).

The AlphaFold2 part of the chemistry prize uses neural networks with attention (like LLMs) [1,2], but it is not clear to me from what I've been able to read that there is any issue of plagiarism; someone please correct me if I'm wrong.

Finally, an LLM is just a kind of neural network built on attention (a Transformer, not a recurrent network), which is a pretty general mechanism [2].

All of this is "as far as I know". I'm not an expert.

References

--------------

[1] "AlphaFold2 and its applications in the fields of biology and medicine", https://www.nature.com/articles/s41392-023-01381-z

[2] "Attention Is All You Need", https://arxiv.org/abs/1706.03762

[3] "Learning representations by back-propagating errors", https://www.nature.com/articles/323533a0

FediThing 🏳️🌈

in reply to David Meyer

I'm not a scientist so I don't really dare dispute anything you say, but sentences like this from the article are a bit concerning:

"Training a machine-learning system involves exposing it to vast amounts of data, often from the internet."

...that doesn't sound like it's seeking opt-in permission for whatever it ingests?

The article gives the impression that AI tools come up with ideas independently, but also mentions ChatGPT as an example, which definitely is a self-contradicting plagiarism system that doesn't create original thoughts.