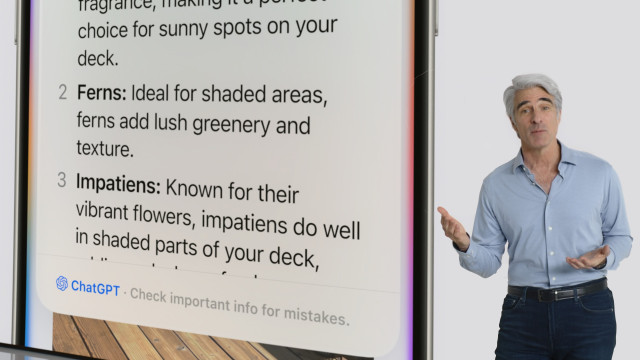

The fact that Apple's implementation of #ChatGPT includes a rather prominent "Check important info for mistakes." warning at the bottom of each output adequately sums up my issues with LLMs. Why use, let alone rely on, a tool that is so prone to failure? I wouldn't eat a meal labelled "Check food for edibility". There are uses for this tech, for example the proofreading feature they demoed. But as an information source, the #LLM still isn't trustworthy.

BeAware :veriweed:

in reply to The Hat Fox